Listening tests, or subjective evaluation of audio, are an essential tool in almost any form of audio and music related research, from data compression codecs to loudspeaker design to the realism of sound effects. Sadly, because of the time and effort required to carefully design a test and recruit a sufficient number of participants, they are also quite expensive.

The advent of web technologies like the Web Audio API, enabling elaborate audio applications within a web page, offers the opportunity to develop browser-based listening tests which mitigate some of the difficulties associated with perceptual evaluation of audio. Researchers at the Centre for Digital Music and Birmingham City University’s Digital Media Technology Lab have developed the Web Audio Evaluation Tool [1] to facilitate listening test design for any experimenter regardless of their programming experience, operating system, test paradigm, interface layout, and location of their test subjects.

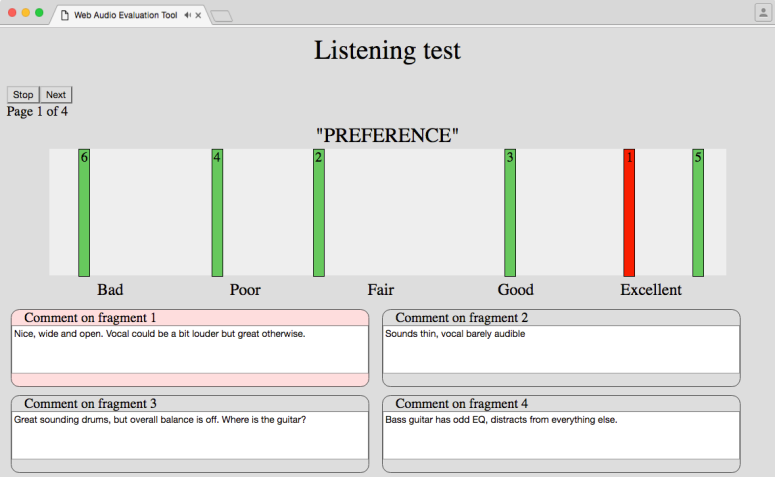

Web Audio Evaluation Tool: An example single-axis, multiple stimuli listening test with comment fields.

Here we cover some of the reasons why you would want to carry out a listening test in the browser, using the Web Audio Evaluation Tool as a case study.

Remote tests

Remote testing is the first and most obvious reason to use a browser-based listening test platform. If you want to conduct a perceptual evaluation study online, i.e. host a website where participants can take the test, then there are no two ways about it: you need a listening test that works within the browser, i.e. one that is based on HTML and JavaScript.

A downloadable application is rarely an elegant solution, and only the most determined participants will end up taking the test, if they can get it to work at all. A website, however, offers a very low threshold for participation.

Pros

- Low effort: A remote test means no booking and setting up of a listening room, no showing the participant into the building, …

- Scales easily: If you can conduct the test once, you can conduct it a virtually unlimited number of times, as long as you find the participants. Amazon Mechanical Turk or similar services could be helpful with this.

- Different locations/cultures/languages within reach: For some types of research, it is necessary to include (a high number of) participants from certain geographical locations, or with certain cultural backgrounds and/or native languages. When these are scarce nearby, and you cannot find the time or funds to fly around the world, a remote listening test can be helpful.

Cons

- Limited programming languages: For the implementation of the test, you are basically constrained to using web technologies such as JavaScript. For someone used to using e.g. MATLAB or C++, this can be off-putting. This is one of the reasons we aim to offer a tool that doesn’t involve any coding for most of the use cases.

- Loss of control: A truly remote test means that you are not present to talk to the participant and answer questions, or to notice when they misunderstand the instructions. You also have little information on their playback system (make and model, how it is set up, …) and you often know less about their background.

Depending on the type of test and research, you may prefer to go either ‘remote’ or ‘local’.

However, it has been shown for certain tasks that there is no significant difference between results from local and remote tests [2,3].

Furthermore, a tool like the Web Audio Evaluation Tool has many safeguards to compensate for this loss of control. Examples of these features include:

- Extensive metrics: Timestamps corresponding with playback and movement events can be automatically visualised to show when participants auditioned which samples and for how long; when they moved which slider from where to where; and so on.

- Post-test checks: Upon submission, optional dialogs can remind the participant of certain instructions, e.g. to listen to all fragments; to move all sliders at least once; to rate at least one stimulus below 20% or at exactly 100%; …

- Audiometric test and calibration of the playback system: An optional series of sliders can be shown at the start of a test, to be set by the participant so that sine waves an octave apart are all equally loud.

- Survey questions: Most relevant information on the participant’s background and playback system can be captured by well-phrased survey questions, which can be incorporated at the start or end of the test.
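
To make the first two safeguards more concrete, here is a minimal Python sketch of how logged timestamps could be turned into listening times and post-test reminders. It is not the Web Audio Evaluation Tool's own code (the tool itself runs in the browser): the `Event` record, its field names, and the `listening_time` and `post_test_checks` functions are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One logged interaction (hypothetical format, not the tool's own schema)."""
    time: float         # seconds since the test page was loaded
    fragment: str       # identifier of the audio fragment
    kind: str           # "play", "stop" or "move"
    value: float = 0.0  # slider position in [0, 1]; only used for "move"


def listening_time(events):
    """Total seconds each fragment was auditioned, from play/stop pairs.

    A "play" without a matching "stop" is ignored.
    """
    started, totals = {}, {}
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "play":
            started[ev.fragment] = ev.time
        elif ev.kind == "stop" and ev.fragment in started:
            elapsed = ev.time - started.pop(ev.fragment)
            totals[ev.fragment] = totals.get(ev.fragment, 0.0) + elapsed
    return totals


def post_test_checks(events, fragments, low=0.2):
    """Return reminder messages; an empty list means the page can be submitted."""
    played = listening_time(events)
    final = {}  # last slider position per fragment
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "move":
            final[ev.fragment] = ev.value

    messages = []
    unplayed = [f for f in fragments if f not in played]
    if unplayed:
        messages.append("Please listen to all fragments: " + ", ".join(unplayed))
    unmoved = [f for f in fragments if f not in final]
    if unmoved:
        messages.append("Please move all sliders at least once: " + ", ".join(unmoved))
    if final and not any(v < low for v in final.values()):
        messages.append(f"Please rate at least one stimulus below {low:.0%}.")
    return messages
```

Calling `post_test_checks(events, ["A", "B"])` at submission time returns the list of reminders to show. Which checks are enabled, and their thresholds, would be up to the experimenter.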

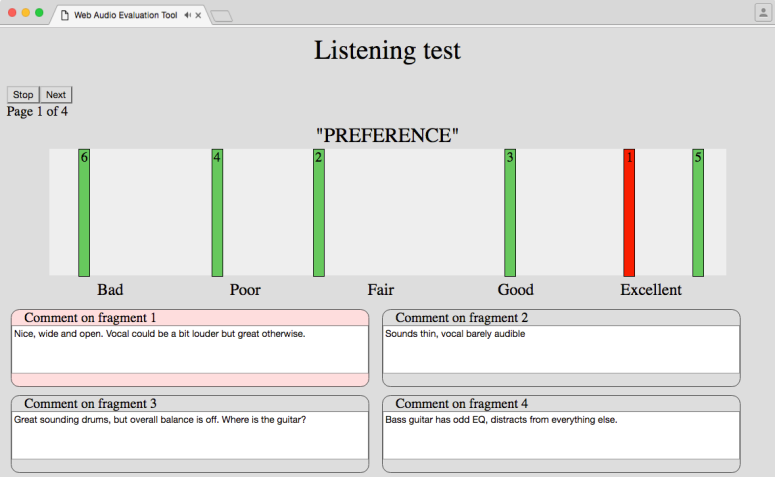

Web Audio Evaluation Tool – an example test interface inspired by the popular MUSHRA standard, typical for the evaluation of audio codecs.

Cross-platform, no third-party software needed

Listening test interfaces can be hard to design, with many factors to take into account. On top of that it may not always be possible to use your personal machine for (all) your listening tests, even when all your tests are ‘local’.

When your interface requires a third-party, proprietary tool like MATLAB or Max to be set up, this can pose a problem, as the tool may not be available where the test is to take place. Furthermore, upgrades to newer versions of this third-party software have been known to ‘break’ listening test software, meaning many more hours of updating and patching.

This is a much bigger problem when the test is to take place at different locations, with different computers and potentially different versions of operating systems or other software.

This has been the single most important driving factor behind the development of the Web Audio Evaluation Tool, even for projects where all tests were controlled, i.e. conducted in a dedicated listening room with known, skilled participants rather than hosted on a web server with ‘internet strangers’ as participants. Because these listening rooms can be in very different geographical locations, with different computers and operating systems, using a standalone test or a third-party application such as MATLAB is often very tedious or even impossible.

In contrast, a browser-based tool typically works on any machine and operating system that supports the browsers it was designed for. In the case of the Web Audio Evaluation Tool, this means Firefox, Chrome, Edge, Safari and essentially every other browser that supports the Web Audio API.

Multiple machines with centralised results collection

Another benefit of a browser-based listening test, again regardless of whether your test takes place ‘locally’ or ‘remotely’, is the possibility of easy, centralised collection of results of these tests. Not only is this more elegant than fetching every test result with a USB drive (from any number of computers you are using), but it is also much safer to save the result to your own server straight away. If you are more paranoid (which is encouraged in the case of listening tests), you can then back up this server continually for redundancy.

In the case of the Web Audio Evaluation Tool, you just put the test on a (local or remote) web server, and the results will be stored to this server by default.

Others have put the test on a regular file server (not web server) and run the included Python server emulator script python/pythonServer.py from the test computer. The results are then stored to the file server, which can be your personal machine on the same network.

Intermediate versions of the results are stored as well, so that the results are not lost in the event of a computer crash, a human error or a forgotten dentist appointment. The test can be resumed at any point.
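
The idea of storing intermediate results and resuming after an interruption can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how the Web Audio Evaluation Tool implements it: the JSON file layout, the function names (`save_progress`, `load_progress`, `run_session`) and the `rate_page` callback are all hypothetical.

```python
import json
import os
import tempfile


def save_progress(path, state):
    """Write the session state atomically, so a crash never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(state, handle)
    os.replace(tmp, path)  # atomic rename within the same directory


def load_progress(path):
    """Return the saved state, or a fresh one if the session has not started yet."""
    if not os.path.exists(path):
        return {"completed_pages": [], "ratings": {}}
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def run_session(path, pages, rate_page):
    """Present every unfinished page and save after each one."""
    state = load_progress(path)
    for page in pages:
        if page in state["completed_pages"]:
            continue  # already rated before the interruption
        state["ratings"][page] = rate_page(page)  # hypothetical callback
        state["completed_pages"].append(page)
        save_progress(path, state)
    return state
```

Because the state is saved after every page, running `run_session` again with the same `path` skips the pages that were already completed and continues with the rest.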

Multiple participants using the Web Audio Evaluation Tool at the same time, at Queen Mary University of London

Leveraging other web technologies

Finally, any listening test which is essentially a website can be integrated within other sites or enhanced with any kind of web technology. We have already seen clever use of YouTube videos as instructions, and of HTML index pages tracking progression through a series of tests.

The Web Audio Evaluation Tool seeks to facilitate this by providing the optional returnURL attribute, which specifies the page the participant is redirected to upon completion of the test. This page can be anything from a Doodle to schedule the next test session, an Amazon voucher or a reward cat video to a secret Eventbrite page for a test participant party.

Are there any other benefits to using a browser-based tool for your listening tests? Please let us know!

[1] N. Jillings, B. De Man, D. Moffat and J. D. Reiss, “Web Audio Evaluation Tool: A browser-based listening test environment,” 12th Sound and Music Computing Conference, 2015.

[2] M. Cartwright, B. Pardo, G. Mysore and M. Hoffman, “Fast and Easy Crowdsourced Perceptual Audio Evaluation,” IEEE International Conference on Acoustics, Speech and Signal Processing, 2016.

[3] M. Schoeffler, F.-R. Stöter, H. Bayerlein, B. Edler and J. Herre, “An Experiment about Estimating the Number of Instruments in Polyphonic Music: A Comparison Between Internet and Laboratory Results,” 14th International Society for Music Information Retrieval Conference, 2013.

This post originally appeared in modified form on the Web Audio Evaluation Tool GitHub wiki.