The Ig Nobel prizes are tongue-in-cheek awards given every year to celebrate unusual or trivial achievements in science. Named as a play on the Nobel prize and the word ignoble, they are intended to ‘“honor achievements that first make people laugh, and then make them think.” Previously, when discussing graphene-based headphones graphene-based headphones, I mentioned Andre Geim, the only scientist to have won both a Nobel and Ig Nobel prize.

I only recently noticed that the 2017 Ig Nobel Peace Prize went to an international team that demonstrated that playing a didgeridoo is an effective treatment for obstructive sleep apnoea and snoring. Here’s a photo of one of the authors of the study playing the didge at the award ceremony.

My own nominees for Ig Nobel prizes, from audio-related research published this past year, would included ‘Influence of Audience Noises on the Classical Music Perception on the Example of Anti-cough Candies Unwrapping Noise’, which we discussed in our preview of the 143rd Audio Engineering Society Convention, and the ‘The DFA Fader: Exploring the Power of Suggestion in Loudness Judgments’ , for which we had the blog entry ‘What the f*** are DFA faders‘.

But lets return to Digeridoo research. Its a fascinating aboriginal Australian instrument, with a rich history and interesting acoustics, and produces an eerie drone-like sound.

A search on google scholar, once removing patents and citations, shows only 38 research papers with Didgeridoo in the title. That’s great news if you want to be an expert on research in the subject. The work of Neville H. Fletcher over about a thirty year period beginning in the early 1980s is probably the main starting point.

The passive acoustics of the didgeridoo are well understood. Its a long truncated conical horn where the player’s lips at the smaller end form a pressure-controlled valve. Knowing the length and diameters involved, its not to difficult to determine the fundamental frequencies (often around 50-100 Hz) and modes excited, and their strengths, in much the same way as can be done for many woodwind instruments.

But that’s just the passive acoustics. Fletcher pointed out that traditional, solo didgeridoo players don’t pay much attention to the resonant frequencies and they’re mainly important when its played in Western music, and needs to fit with the rest of an ensemble.

Things start getting really interesting when one considers the sounding mechanism. Players make heavy use of circular breathing, breathing in through the nose while breathing out through the mouth, even more so, and more rhythmically, than is typical in performing Western brass instruments like trumpets and tubas. Changes in lip motion and vocal tract shape are then used to control the formants, allowing the manipulation of very rich timbres.

Its these aspects of didgeridoo playing that intrigued the authors of the sleep apnoea study. Like the DFA and cough drop wrapper studies mentioned above, these were serious studies on a seemingly not so serious subject. Circular breathing and training of respiratory muscles may go a long way towards improving nighttime breathing, and hence reducing snoring and sleep disturbances. The study was controlled and randomised. But, its incredibly difficult in these sorts of studies to eliminate or control for all the other variables, and very hard to identify which aspect of the didgeridoo playing was responsible for the better sleep. The authors quite rightly highlighted what I think is one of the biggest question marks in the study;

A limitation is that those in the control group were simply put on a waiting list because a sham intervention for didgeridoo playing would be difficult. A control intervention such as playing a recorder would have been an option, but we would not be able to exclude effects on the upper airways and compliance might be poor.

In that respect, drug trials are somewhat easier to interpret than practice-based intervention. But the effect was abundantly clear and quite strong. One certainly should not dismiss the results because of limitations (the limitations give rise to question marks, but they’re not mistakes) in the study.

- Milo A. Puhan, Alex Suarez, Christian Lo Cascio, Alfred Zahn, Markus Heitz and Otto Braendli, “Didgeridoo Playing as Alternative Treatment for Obstructive Sleep Apnoea Syndrome: Randomised Controlled Trial ,” BMJ, vol. 332 December 2006.

- Fletcher, Neville. “The didjeridu (didgeridoo).” Acoustics Australia 24 (1996): 11-16.

- Tarnopolsky, Alex, et al. “Acoustics: the vocal tract and the sound of a didgeridoo.” Nature 436.7047 (2005): 39.

- Pilch, Adam, et al. “Influence of Audience Noises on the Classical Music Perception on the Example of Anti-cough Candies Unwrapping Noise.” Audio Engineering Society Convention 143, 2017.

- Haigh, Jack, and Malachy Ronan. “The DFA Fader: Exploring the Power of Suggestion in Loudness Judgments.” Audio Engineering Society Convention 142, 2017.

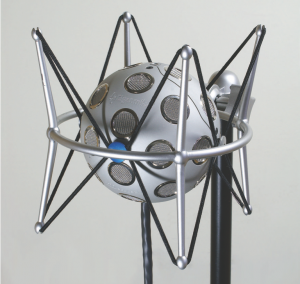

The Eigenmike

The Eigenmike