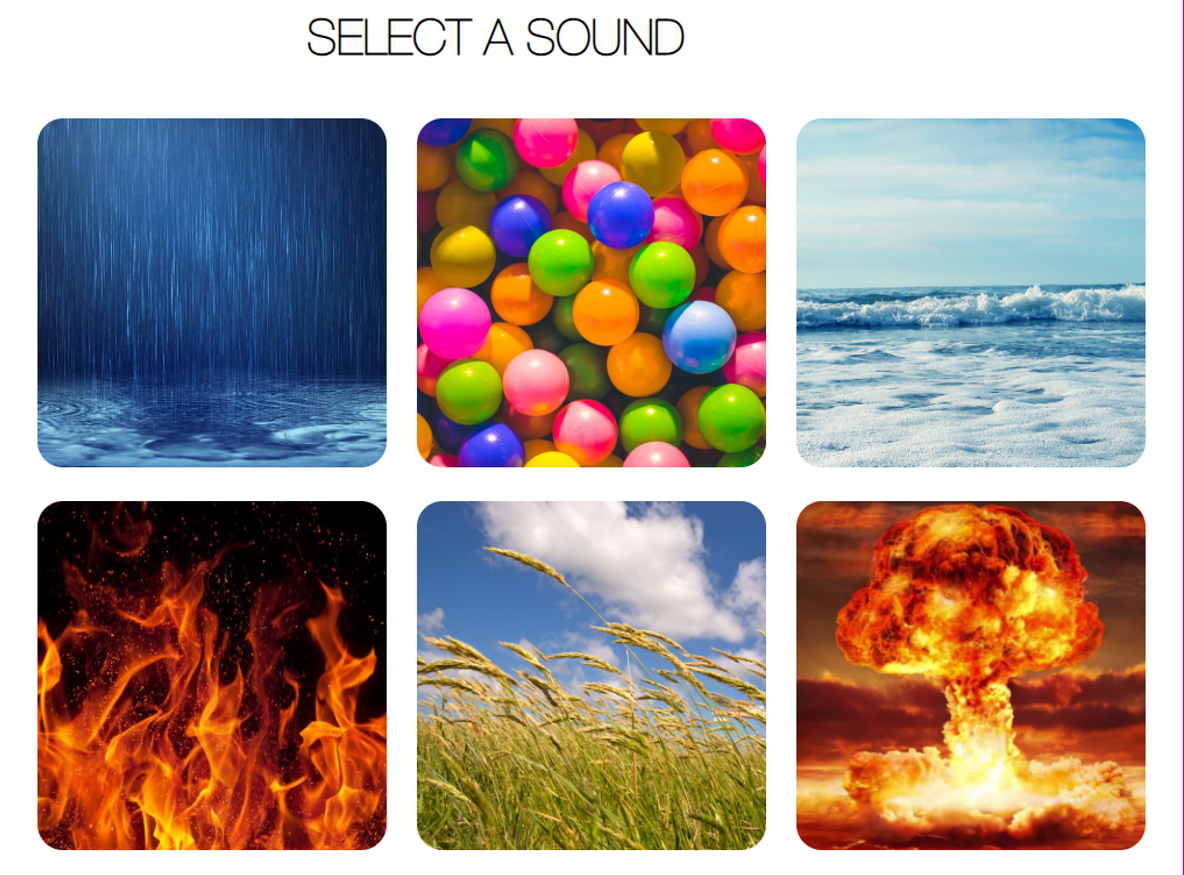

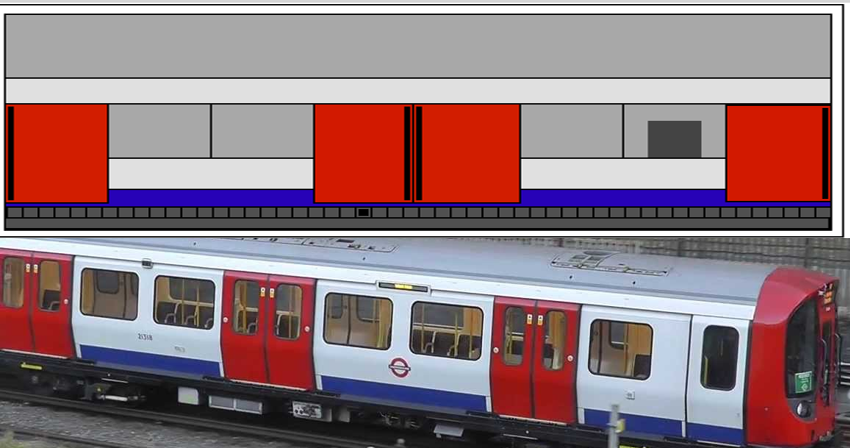

Recently, we’ve been doing a lot of audio development for applications running in the browser, such as the procedural audio and sound synthesis system FXive and the Web Audio Evaluation Tool (WAET). The Web Audio API is part of HTML5, and it’s a high-level Application Programming Interface with a lot of built-in functions for processing and generating sound. The idea is that it gives you what you need to have any audio application (audio effects, virtual instruments, editing and analysis tools…) running as JavaScript in a web browser.

It uses a dataflow model, like LabVIEW and media-focused languages such as Max/MSP, Pure Data and Reaktor. So you create oscillators, connect them to filters, combine them, and then connect the result to the output to play the sound. But unlike the others, it’s not graphical, since you write it as JavaScript, like most code that runs client-side in a web browser.
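As a minimal sketch of that dataflow style, the snippet below wires an oscillator through a low-pass filter to the output. The function name `buildToneGraph` and its default values are made up for illustration; `ctx` is assumed to be an `AudioContext` created by the caller.

```javascript
// Sketch: oscillator -> low-pass filter -> output.
// `ctx` is assumed to be an AudioContext (or OfflineAudioContext).
// The function name and default values are illustrative only.
function buildToneGraph(ctx, frequencyHz = 220, cutoffHz = 1000) {
  const osc = ctx.createOscillator();
  osc.type = 'sawtooth';
  osc.frequency.value = frequencyHz;

  const filter = ctx.createBiquadFilter();
  filter.type = 'lowpass';
  filter.frequency.value = cutoffHz;

  // connect() returns the node it was connected to, so calls can be chained.
  osc.connect(filter).connect(ctx.destination);
  return { osc, filter };
}

// In a browser, usually from a click handler because of autoplay policies:
// const ctx = new AudioContext();
// const { osc } = buildToneGraph(ctx);
// osc.start();
```

Nothing sounds until `start()` is called on the oscillator, and the nodes can be rewired or have their parameters changed while the graph is running.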

Sounds great, right? And it is. But a lot of strange choices went into the API. They don’t make it unusable or anything like that, but they do sometimes leave you kicking in frustration and thinking the coding would be so much easier if only… Here are a few of them.

- There’s no built-in noise signal generator. You can create sine waves, sawtooth waves, square waves… but not noise. Generating audio-rate random numbers is built into pretty much every other audio development environment, and in almost every web audio application I’ve seen, the developers have redone it themselves, with ScriptProcessors, AudioWorklets, or buffered noise classes or methods.

- The low pass, high pass, low shelving and high shelving filters in the Web Audio API are not the standard first order designs, as taught in signal processing and described in [1, 2] and the many references therein. The low pass and high pass are resonant second order filters, and the shelving filters are the less common alternatives to the first order designs. This is OK for a lot of cases where you are developing a new application with a bit of filtering, but it’s a major pain if you’re writing a web version of something written in MATLAB, Pure Data or lots and lots of other environments where the basic low pass and high pass filters are standard first order designs.

- The oscillators come with a detune property that represents detuning of the oscillation in hundredths of a semitone, or cents. I suppose it’s a nice feature if you are using cents on the interface and dealing with musical intervals. But it has the same effect as changing the frequency parameter (the oscillator runs at frequency × 2^(detune/1200)) and doesn’t save a single line of code. There are other useful parameters that can’t be changed at all, like the phase, or the duty cycle of a square wave. https://github.com/Flarp/better-oscillator is an alternative implementation that addresses this.

- The square, sawtooth and triangle waves are not what you think they are. Instead of the triangle wave being a periodic ramp up and ramp down, for example, each waveform is the sum of a few terms of the Fourier series that approximates it. This is nice if you want to avoid aliasing, but wrong for every other use. It took me a long time to figure this out when I tried modulating a signal by a square wave to turn it on and off. Again, https://github.com/Flarp/better-oscillator gives an alternative implementation with the actual waveforms.

- The API comes with an IIR filter node (`IIRFilterNode`) that allows you to create almost arbitrary infinite impulse response filters. But you can’t change the coefficients once it’s created. So it’s useless for most web audio applications, which involve some control or interaction.
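For the first item in the list, the buffered-noise workaround might look something like the sketch below. The function names `fillWhiteNoise` and `createNoiseSource` and the two-second loop length are assumptions for illustration, not anything the API prescribes.

```javascript
// Fill a Float32Array with uniform white noise in the range -1 to 1.
// `random` is injectable so the function can be tested deterministically.
function fillWhiteNoise(samples, random = Math.random) {
  for (let i = 0; i < samples.length; i++) {
    samples[i] = random() * 2 - 1;
  }
  return samples;
}

// Wrap the noise in a looping AudioBufferSourceNode.
// `ctx` is assumed to be an AudioContext; `seconds` is the loop length.
function createNoiseSource(ctx, seconds = 2) {
  const length = Math.floor(ctx.sampleRate * seconds);
  const buffer = ctx.createBuffer(1, length, ctx.sampleRate);
  fillWhiteNoise(buffer.getChannelData(0));

  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.loop = true;
  return source;
}

// In a browser:
// const noise = createNoiseSource(ctx);
// noise.connect(ctx.destination);
// noise.start();
```

Because the buffer loops, the noise is periodic; a very short loop can become audible as a repeating pattern, which is one reason some developers prefer to generate samples continuously in an AudioWorklet instead.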

Despite all that, it’s pretty amazing. And you can get around all these issues, since you can always write your own audio worklets for any audio processing and generation. But you shouldn’t have to.
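Not every workaround needs a worklet. For the filter complaint, one option is to compute first order coefficients yourself and pass them to `createIIRFilter(feedforward, feedback)`. The sketch below uses the textbook bilinear-transform design of a first order low pass filter; the helper names `firstOrderLowpass` and `magnitudeAt` are hypothetical, and the second one is only there to sanity-check the design.

```javascript
// First order low pass coefficients via the bilinear transform with
// frequency prewarping, in the order createIIRFilter() expects.
function firstOrderLowpass(cutoffHz, sampleRate) {
  const k = Math.tan(Math.PI * cutoffHz / sampleRate);
  const b = k / (k + 1);
  return {
    feedforward: [b, b],              // numerator:   b + b z^-1
    feedback: [1, (k - 1) / (k + 1)], // denominator: 1 + a1 z^-1
  };
}

// Magnitude response |H(e^{jw})| at freqHz, for checking the design.
function magnitudeAt({ feedforward, feedback }, freqHz, sampleRate) {
  const w = 2 * Math.PI * freqHz / sampleRate;
  const polyMagnitude = (coeffs) => {
    let re = 0;
    let im = 0;
    coeffs.forEach((c, n) => {
      re += c * Math.cos(n * w);
      im -= c * Math.sin(n * w);
    });
    return Math.hypot(re, im);
  };
  return polyMagnitude(feedforward) / polyMagnitude(feedback);
}

// In a browser:
// const { feedforward, feedback } = firstOrderLowpass(1000, ctx.sampleRate);
// const lowpass = ctx.createIIRFilter(feedforward, feedback);
```

The gain is 1 at DC and about 0.707 (−3 dB) at the cutoff. Since the coefficients are fixed once the node exists, changing the cutoff means building a new node and swapping it into the graph, so this only suits filters that rarely change.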

We’ve published a few papers on the Web Audio API and what you can do with it [3–7], so please check them out if you are doing some R&D involving it.

[1] J. D. Reiss and A. P. McPherson, “Audio Effects: Theory, Implementation and Application,” CRC Press, 2014.

[2] V. Välimäki and J. D. Reiss, “All About Audio Equalization: Solutions and Frontiers,” Applied Sciences, special issue on Audio Signal Processing, 6 (5), May 2016.

[3] P. Bahadoran, A. Benito, T. Vassallo and J. D. Reiss, “FXive: A Web Platform for Procedural Sound Synthesis,” Audio Engineering Society Convention 144, May 2018.

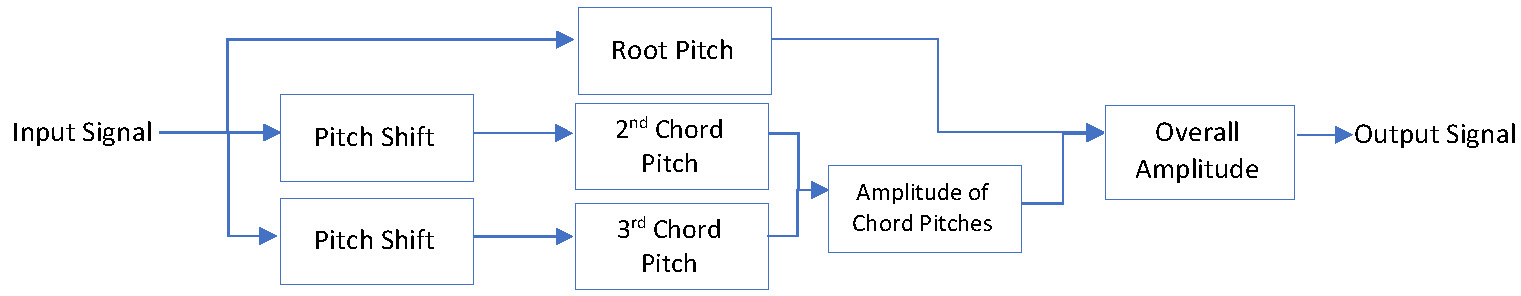

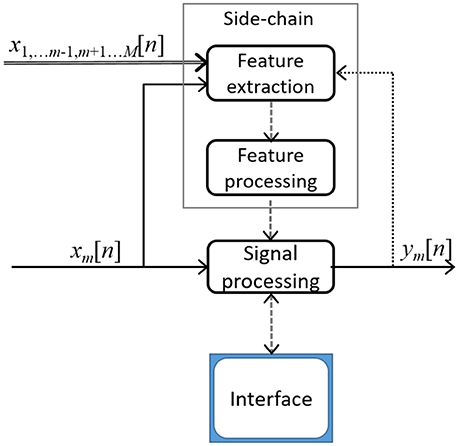

[4] N. Jillings, Y. Wang, R. Stables and J. D. Reiss, “Intelligent audio plugin framework for the Web Audio API,” Web Audio Conference, London, 2017.

[5] N. Jillings, Y. Wang, J. D. Reiss and R. Stables, “JSAP: A Plugin Standard for the Web Audio API with Intelligent Functionality,” 141st Audio Engineering Society Convention, Los Angeles, USA, 2016.

[6] N. Jillings, D. Moffat, B. De Man, J. D. Reiss and R. Stables, “Web Audio Evaluation Tool: A framework for subjective assessment of audio,” 2nd Web Audio Conf., Atlanta, 2016.

[7] N. Jillings, B. De Man, D. Moffat and J. D. Reiss, “Web Audio Evaluation Tool: A Browser-Based Listening Test Environment,” Sound and Music Computing (SMC), July 26 – Aug. 1, 2015.